Got to messing around a bit more with awd-lstm-lm… and, by modifying the poetry generation loop so that it reloads the model with a new seed each iteration, used the reload time as an organic tiny delay. The result is like a poem flick: every 300 ms (on average, on this machine) the system generates 222 words.
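
As a rough illustration of that loop, here is a minimal stdlib-only sketch. It is not the actual generate_INFINITE_RANDOM-SEED_mintmaxt.py script: the toy "model" (a fixed table of next-word logits), the names load_toy_model, sample_next, generate_burst and run_forever, the tiny vocabulary, and the choice of one temperature per poem drawn from the 0.8 to 1.2 range are all hypothetical. The sampling step follows the general approach of awd-lstm-lm's stock generate.py (divide the output logits by the temperature, exponentiate, draw one word in proportion to the weights). Here the "reload" is instantaneous; with a real checkpoint on the GPU, that reload is where the tiny delay comes from.

```python
import math
import random
import time

# Hypothetical toy vocabulary standing in for the real corpus dictionary.
VOCAB = ["river", "smoke", "glass", "wing", "ash", "moon", "<eos>"]


def load_toy_model():
    """Hypothetical stand-in for reloading the QRNN checkpoint.

    Returns next-word logits keyed by the previous word. A fixed init seed
    makes every "reload" return identical weights, as a checkpoint would.
    """
    init = random.Random(0)
    return {w: [init.uniform(-2.0, 2.0) for _ in VOCAB] for w in VOCAB}


def sample_next(logits, temperature, rng):
    # Divide logits by the temperature, exponentiate, and draw one word in
    # proportion to the resulting weights (lower T sharpens the distribution).
    weights = [math.exp(x / temperature) for x in logits]
    return rng.choices(VOCAB, weights=weights, k=1)[0]


def generate_burst(seed, n_words=222, mint=0.8, maxt=1.2):
    rng = random.Random(seed)              # fresh seed for this poem
    model = load_toy_model()               # reload the "model" every poem
    temperature = rng.uniform(mint, maxt)  # assumption: one temperature per poem
    word = rng.choice(VOCAB)               # random first word
    words = []
    for _ in range(n_words):
        word = sample_next(model[word], temperature, rng)
        words.append(word)
    return words, temperature


def run_forever(max_poems=None):
    # Loops until interrupted (Ctrl-C) unless max_poems is given.
    count = 0
    while max_poems is None or count < max_poems:
        seed = random.SystemRandom().randrange(2**32)  # new random seed
        start = time.perf_counter()
        words, temperature = generate_burst(seed)
        elapsed_ms = (time.perf_counter() - start) * 1000
        print(f"seed={seed} T={temperature:.2f} ({elapsed_ms:.1f} ms)")
        # Assumption: <eos> tokens become line breaks in the printed poem.
        print(" ".join(words).replace(" <eos> ", "\n").replace("<eos>", "\n"))
        count += 1
```

Calling run_forever() prints 222-word bursts until interrupted; run_forever(max_poems=3) stops after three. Because each burst is driven entirely by its seed, re-running generate_burst with the same seed reproduces the same poem, while a new seed gives a new one.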

Is it presumptuous to call these 222-word bursts poetry?

A talented intellectual friend refers to them as nonsense. It’s an assessment I can accept. But within these nonsensical rantings are such lucid hallucinatory fragments that the act of writing poetry under such circumstances (rather than waiting for wings of inspiration, or the tickle of some conceptual tongue) becomes more geological: an act of patient sifting, weaving dexterity applied to the excess, editing/panning for nuggets among an avalanche of disconnected debris.

If some nuance of intimacy is buried in the process, so be it; the muses are (often) indifferent to the sufferings of those who sing the songs; these epic sessions in all their incoherence signal an emergent rupture in the continuum of creativity.

Yet the lack of coherence also signals limit-case challenges to so-called deep learning: context and embodiment. Poems are creatures that encompass extreme defiant agility in terms of symbolic affinities, yet they also demonstrate embodied coherence, swirl into finales, comprehend the reader. Without the construction of a functional digital emulation of emotional reasoning (as posited by Rosalind Picard and Aaron Sloman, among others) that is trained on a corpus derived from embodied experience, such poetry will remain gibberish, inert until massaged by the human heart. So it is.

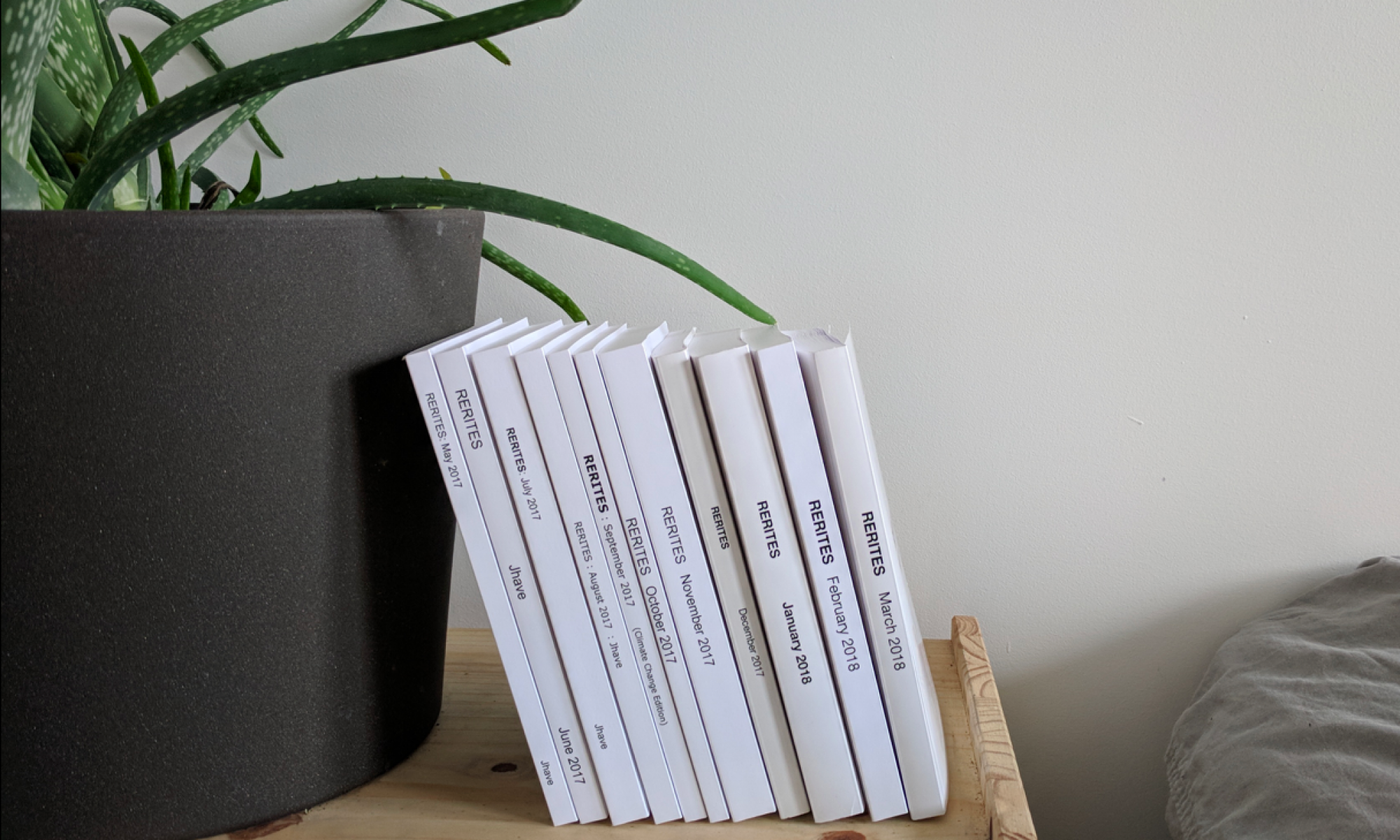

These poems will be used as the source text for January’s RERITES.

Video:

Text:

generated-2017-12-15T12-29-56_Screencast 2017-12-15 14:21:42_CL

Description:

Averaged Stochastic Gradient Descent with Weight Dropped QRNN Poetry Generation

Trained on 197,923 lines of poetry & pop lyrics.

Poetry sources: a subset of Poetry Magazine, Jacket2, 2 River, Capa, Evergreen Review, Cathay by Li Bai, Kenneth Patchen, Maurice Blanchot, and previous Rerites.

Lyric sources: Bob Marley, Bob Dylan, David Bowie, Tom Waits, Patti Smith, Radiohead.

+Tech-terminology source: jhavelikes.tumblr.com

+~+

Library: PyTorch

(word-language-model modified by Salesforce Research)

https://github.com/salesforce/awd-lstm-lm

+~+

Mode: QRNN

Embedding size: 400

Hidden units per layer: 1550

Batch size: 20

Epoch: 478

Loss: 3.62

Perplexity: 37.16

Temperature range: 0.8 to 1.2

System will generate 222-word bursts, perpetually, until stopped.
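
The Loss and Perplexity figures in the list above are two views of one quantity: awd-lstm-lm reports loss as the mean per-word cross-entropy (natural log, i.e. nats) and prints perplexity as its exponential. A quick check, using only the two numbers copied from the list (nothing new is measured), shows they agree once rounding is taken into account:

```python
import math

# Values copied from the spec list above.
reported_ppl = 37.16
reported_loss = 3.62

# Perplexity = exp(mean cross-entropy in nats), so loss = log(perplexity).
loss_from_ppl = math.log(reported_ppl)   # about 3.6152, which rounds to 3.62
ppl_from_loss = math.exp(reported_loss)  # about 37.34, since 3.62 is already rounded

print(f"log({reported_ppl}) = {loss_from_ppl:.4f}")
print(f"exp({reported_loss}) = {ppl_from_loss:.2f}")
```

Working backwards from the perplexity gives a loss of about 3.6152; exponentiating the rounded 3.62 overshoots slightly (about 37.34), which is just the effect of rounding the loss to two decimals.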

SYSTEM OUTPUT:

REAL-TIME generation on TitanX GPU

re-loading model every poem

fresh with a new RANDOM SEED.

~ + ~

Code:

(awd-py36) jhave@jhave-Ubuntu:~/Documents/Github/awd-lstm-lm-master$ python generate_INFINITE_RANDOM-SEED_mintmaxt.py --cuda --words=222 --checkpoint="models/SUBEST4+JACKET2+LYRICS_QRNN-PBT_Dec11_FineTune+Pointer.pt" --model=QRNN --data='data/dec_rerites_SUBEST4+JACKET2+LYRICS' --mint=0.8 --maxt=1.2