Mead recipe: dilute honey with water, stir twice a day, wait.

Fermentation begins after 24-48 hours. After a week, the fermented honey-wine (mead) can be enjoyed green, low in alcohol yet lively with essence. Or you can let it continue.

Generative-poetry recipe: text-corpus analysed by neural net, wait.

After each reading of the corpus (a.k.a. a ‘training epoch’), a neural net can produce and save a model. Think of the model as an ecosystem produced by fermentation: an idea, a bubbling contingency, a knot of flavours, a succulent reservoir, a tangy substrate that represents the contours, topology or intricacies of the source corpus. Early models (after 4-7 epochs the models are still young) may be obscure but are often buoyant with energy (like kids mimicking modern dance).
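The epoch-by-epoch rhythm described above can be sketched in a few lines. This is a toy under stated assumptions, not the network that wrote the poems: a tiny character-bigram softmax model stands in for the LSTM, and the names `train_epochs` and `snapshots`, the learning rate and the sample corpus are all hypothetical. What it shows is only the shape of the process: one epoch is one full reading of the corpus, and a copy of the model is set aside after each one.

```python
import copy
import math


def train_epochs(corpus, n_epochs=3, lr=0.5):
    """Train a toy character-bigram softmax model; snapshot it after every epoch."""
    vocab = sorted(set(corpus))
    index = {ch: i for i, ch in enumerate(vocab)}
    n = len(vocab)
    # weights[i][j] is the logit for character j following character i.
    weights = [[0.0] * n for _ in range(n)]
    snapshots = []
    for epoch in range(1, n_epochs + 1):
        total_loss = 0.0
        # One epoch = one full reading of the corpus.
        for prev, nxt in zip(corpus, corpus[1:]):
            row = weights[index[prev]]
            target = index[nxt]
            peak = max(row)  # subtract the max logit for numerical stability
            exps = [math.exp(w - peak) for w in row]
            norm = sum(exps)
            probs = [e / norm for e in exps]
            total_loss += -math.log(probs[target])
            # SGD step on cross-entropy: gradient is probs - one_hot(target).
            for j in range(n):
                grad = probs[j] - (1.0 if j == target else 0.0)
                row[j] -= lr * grad
        mean_loss = total_loss / (len(corpus) - 1)
        # Set aside an independent copy of the model: a "young" or "aged" vintage.
        snapshots.append(
            {"epoch": epoch, "loss": mean_loss, "weights": copy.deepcopy(weights)}
        )
    return snapshots


# Hypothetical corpus, borrowed from the mead recipe.
snapshots = train_epochs("dilute honey with water, stir twice a day, wait. " * 5)
for snap in snapshots:
    print(snap["epoch"], round(snap["loss"], 3))
```

Each entry in `snapshots` is a separate model that could be sampled from on its own, which is why a 2-epoch model and a 20-epoch model can be tasted side by side.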

Here’s an example from a model that is only 2 epochs old:

Daft and bagel, of sycamore.

“Now thou so sex–sheer if I see Conquerours.

So he is beneath the lake, where they pass together,

Amid the twister that squeeze-box fire:

Here’s an example from a model that is 6 epochs old:

Yellow cornbread and yellow gems

and all spring to eat.

Owls of the sun: the oldest worm of light

robed in the advances of a spark.

Later models (after 20 epochs), held in vats (epoch after epoch), exhibit more refined microbial (i.e. algorithmic) calibrations:

Stale nose of empty lair

The bright lush walls are fresh and lively and weeping,

Whirling into the night as I stand

unicorn raging on the serenade

Of floors of sunset after evil-spirits,

adagio.

Eventually fermentation processes halt. In the case of mead, this may occur after a month. With neural nets, a process called annealing (named by analogy with simulated annealing) intentionally decreases the learning rate as training proceeds, so the system begins by exploring large features and then focuses on details. Eventually the learning rate diminishes towards zero. Learning (fermentation) stops.
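The shrinking learning rate described above can be sketched as a simple schedule. This is a minimal illustration under assumptions: exponential decay is only one of several common schedules, the function name `annealed_learning_rates` is hypothetical, and the starting rate and decay factor are made-up values, not the settings used for these poems.

```python
def annealed_learning_rates(initial_lr, decay, steps):
    """Return the learning rate used at each step under exponential decay."""
    rates = []
    lr = initial_lr
    for _ in range(steps):
        rates.append(lr)
        lr *= decay  # shrink a little every iteration (assumed schedule)
    return rates


# Hypothetical values: start at 1.0 and halve the rate at every step.
for step, lr in enumerate(annealed_learning_rates(1.0, 0.5, 8)):
    print(step, lr)
```

Large early steps let the weights move freely; later steps are so small that the model only adjusts details. Under this kind of decay the rate never reaches exactly zero, but it becomes small enough that learning effectively stops.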

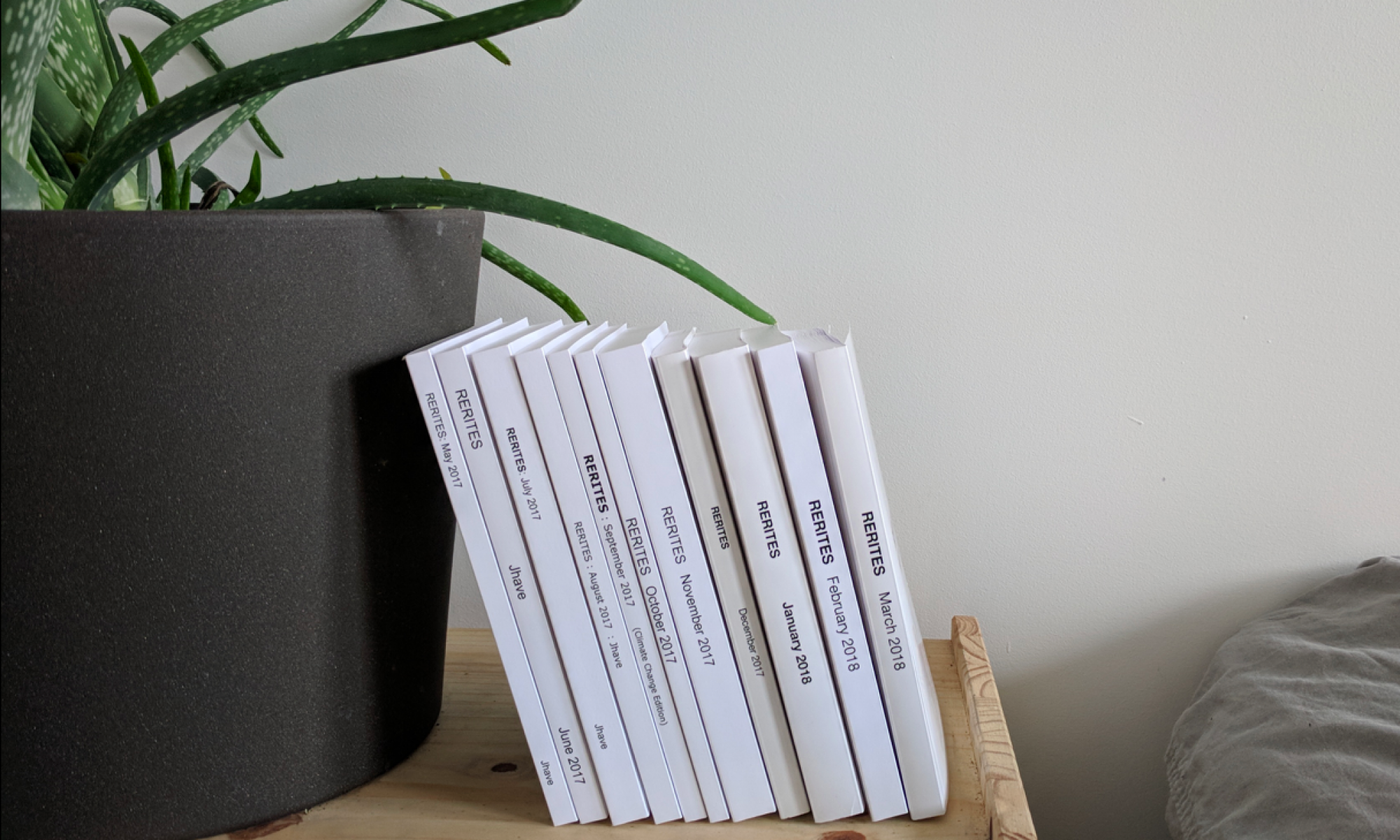

TEXT FILES

2017-04-30 09:49:10_EPOCH-6
2017-04-30 13:27:15_EPOCH-16
2017-04-30 23:03:42_EPOCH-4
2017-05-03 09:41:35_2017-05-02T06-19-17_EPOCH22
2017-05-03 12:54:57_2017-05-02T06-19-17_EPOCH-6
2017-05-12 12:24:54_2017-05-02T06-19-17_EPOCH22

2017-05-13 21:24:06_2017-05-02T06-19-17_-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_7-loss_6.02-ppl_412.27
2017-05-14 23:51:14_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_8-loss_6.03-ppl_417.49
2017-05-15 11:00:03_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_3-loss_6.09-ppl_439.57
2017-05-15 11:28:24_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_4-loss_6.04-ppl_421.71
2017-05-15 11:53:40_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_5-loss_6.02-ppl_413.64
2017-05-15 21:08:56_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_9-loss_6.02-ppl_409.87
2017-05-15 21:48:55_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_10-loss_6.03-ppl_416.36
2017-05-15 22:21:11_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_11-loss_6.02-ppl_413.61
2017-05-15 22:48:53_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_12-loss_6.03-ppl_414.69
2017-05-15 23:24:25_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_13-loss_6.03-ppl_417.08
2017-05-15 23:53:21_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_14-loss_6.03-ppl_416.68
pytorch-poet_2017-05-02T06-19-17_models-6-7-8-9-10-11-12-13-14
2017-05-16 09:26:18_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_15-loss_6.03-ppl_416.60
2017-05-16 10:08:15_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_16-loss_6.03-ppl_414.91
2017-05-16 10:45:58_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_17-loss_6.03-ppl_415.35
2017-05-18 23:48:36_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_18-loss_6.03-ppl_414.10
2017-05-20 10:35:17_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_19-loss_6.03-ppl_413.68
2017-05-18 22:10:36_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_17-loss_6.03-ppl_415.35
2017-05-20 22:01:22_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_20-loss_6.02-ppl_413.08
2017-05-21 12:58:03_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_21-loss_6.02-ppl_413.07
2017-05-21 14:38:29_model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_22-loss_6.02-ppl_412.68
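The longer file names above carry the epoch number, the loss and the perplexity (`ppl`) of each saved model. A short sketch of how those values could be read back out is given here; the helper `parse_checkpoint` and its regular expression are assumptions written for this listing, not part of the original training code. Perplexity is conventionally the exponential of the mean cross-entropy loss, and the listed numbers appear consistent with that once the loss is rounded to two decimals.

```python
import math
import re

# Hypothetical pattern, written to match the "-epoch_N-loss_X-ppl_Y" suffix above.
CHECKPOINT_PATTERN = re.compile(r"epoch_(\d+)-loss_(\d+\.\d+)-ppl_(\d+\.\d+)")


def parse_checkpoint(name):
    """Extract epoch, loss and perplexity from a model file name, or return None."""
    match = CHECKPOINT_PATTERN.search(name)
    if match is None:
        return None
    epoch, loss, ppl = match.groups()
    return {"epoch": int(epoch), "loss": float(loss), "ppl": float(ppl)}


# Two names copied from the listing above.
names = [
    "model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_3-loss_6.09-ppl_439.57",
    "model-LSTM-emsize-1500-nhid_1500-nlayers_2-batch_size_20-epoch_9-loss_6.02-ppl_409.87",
]
for name in names:
    info = parse_checkpoint(name)
    # log(ppl) should land close to the rounded loss in the file name.
    print(info["epoch"], info["loss"], round(math.log(info["ppl"]), 3))
```

Read this way, the listing shows perplexity falling quickly over the first few epochs and then hovering in a narrow band, which matches the picture of fermentation slowing down.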