Yesterday, sitting in Hong Kong, I launched a cluster of GPUs in North Carolina and ran a neural net for 9 hours to generate proto-language.

Using modified code from the Keras example on LSTM text generation (and aided by a tutorial on aws-keras-theano-tensorflow integration), the cluster produced 76,976 words.

Many of these words are new, never seen before. It’s like having Kurt Schwitters on tap.

mengaporal concents

typhinal voivat

the dusial prespirals

of Inimated dootion

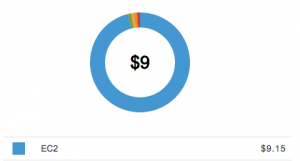

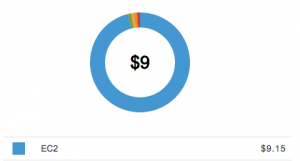

Here is my bill for over 11 hours of GPU cluster time:

Neural nets learn through exposure to words, just like babies. The more they hear, the better they get. For preliminary testing of the code, a 2-layer 256-cell LSTM neural net was trained on a source text of moderate size: a draft of my book, as a 430 KB text file. So it’s as if a baby were exposed only to critical literary theory: no kids' books, no conversation, just theory.

The results can be very, very strange:

in a way of stumalized scabes occurs forms. and then paradigm for some control implicit and surjace of need)

And they evolve over time from simplicity:

and conceptual poetry and conceptual poetry in the same that the same that the same that the same that has been are conceptual poetry and conceptual process of the static entity and conceptual sourd subjects that in the same of the same that digital poetry in the static and conceptual sourd source of the static entity and conceptual sourd source of the static entity and conceptual poetry in the station of the static environment for the station of a section of a section of a section of a section of a section of a section of a section of a section of a section of a section of a section of a section of a section of a section of a

… to complexity

u archetypht]mopapoph wrud mouses occurbess, the disavil centory that inturainment ephios into the reputiting the sinctions or this suinncage encour. That language, Y. untiletterforms, bear. and matter a nalasist relues words. this remagming in nearogra`mer struce. (, things that digital entibles offorms to converaction difficued harknors complex the sprict but the use of procomemically mediate the cunture from succohs at eyerned that is cason, other continuity. As a discubating elanted intulication action, these tisting as sourdage. Fore?is pobegria, prighuint, take sculptural digital cogial into computers to Audiomraphic ergeption in the hybping the language. /Ay it bodies to between as if your this may evorv: and or all was be as unityle/disity 0poeliar stance that shy. in from this ke

It is important to recognize that the machine is generating words character by character: it has never seen a dictionary; it is not given any knowledge of grammar rules; it is not preloaded with words or spelling. This is not random replacement or shuffling; it is a recursive groping toward sense, articulate math, cyber-toddler lit. And it is based on a 9-hour exposure to a single 200-page book with an intellectual idiom. More complex nets based on vast corpuses trained over weeks will surely produce results that are astonishing.
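To make "character by character" concrete, here is a minimal sketch of how a text can be cut into training examples for this kind of net: each example is a short window of characters paired with the single character that follows it. The function name `make_training_pairs` and the default values of `maxlen` and `step` are assumptions for illustration, not values taken from my modified code.

```python
def make_training_pairs(text, maxlen=40, step=3):
    """Cut text into overlapping windows plus the character that follows each one.

    maxlen and step are illustrative assumptions, not values from the post.
    """
    chars = sorted(set(text))                      # the net's entire "alphabet"
    char_to_index = {c: i for i, c in enumerate(chars)}
    windows, next_chars = [], []
    for start in range(0, len(text) - maxlen, step):
        windows.append(text[start:start + maxlen])  # what the net is shown
        next_chars.append(text[start + maxlen])     # what it must predict
    return chars, char_to_index, windows, next_chars


sample = "conceptual poetry and conceptual process"
chars, char_to_index, windows, next_chars = make_training_pairs(
    sample, maxlen=10, step=5
)
for window, target in zip(windows, next_chars):
    print(repr(window), "->", repr(target))
```

Nothing in these pairs marks where a word begins or ends; spaces and punctuation are just more characters to predict.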

Yet I somehow feel that there is a limit to this (LSTM) architecture’s capacity to replicate thought. Training progress is measured through a loss function: loss began at 2.87 and, over 46 runs (known as epochs), descended to 0.2541. A kind of sinuous sense emerges from the babble, but it is like listening to an impersonation of thought done in an imaginary language by an echolaliac. Coherency is scarce.
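One way to read those loss numbers, assuming the loss is a mean categorical cross-entropy measured in nats (the usual choice for next-character prediction, but an assumption here): `exp(loss)` is the perplexity, roughly the number of characters the net is still hesitating between, and `exp(-loss)` is the geometric-mean probability it gives to the correct next character. The helper `describe_loss` is a hypothetical name used only for this sketch.

```python
import math


def describe_loss(loss):
    """Interpret a mean cross-entropy loss, assumed to be measured in nats."""
    perplexity = math.exp(loss)     # effective number of equally likely choices
    typical_prob = math.exp(-loss)  # geometric-mean probability of the right character
    return perplexity, typical_prob


for label, loss in [("start", 2.87), ("end", 0.2541)]:
    perplexity, typical_prob = describe_loss(loss)
    print(f"{label}: loss={loss} perplexity={perplexity:.2f} "
          f"typical_prob={typical_prob:.3f}")
```

Under that assumption, the net starts out hesitating between about 17.6 characters at each step and ends up hesitating between about 1.29.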

Code & Complete Output

Code is on github.

Complete 80k word output saved directly from the Terminal: here.

Excerpts

Do you like nonsense? Nostalgic for Lewis Carroll?

Edited excerpts (I filtered by seeking neologisms, then hand-tuned for rhythm and cadence) are output here, and include stuff like:

In the is networks, the reads on Gexture

orthorate moth process

and sprict in the contrate

in the tith reader, oncologies

appoth on the entered sure

in ongar interpars the cractive sompates

betuental epresed programmeds

in the contiele ore presessores

and practions spotute pootry

in grath porming

phosss somnos prosent
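The neologism filtering mentioned above can be approximated in a few lines. This is only a sketch of one plausible approach, not the procedure I actually used: it treats any generated word that never occurs in the source text as a neologism. The function names and the two sample strings are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and return its alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def find_neologisms(generated_text, source_text):
    """Return generated words absent from the source text, in order of first appearance."""
    known = set(tokenize(source_text))
    seen, result = set(), []
    for word in tokenize(generated_text):
        if word not in known and word not in seen:
            seen.add(word)
            result.append(word)
    return result


# Hypothetical stand-ins for the book draft and the net's output.
source_text = "the static entity of conceptual poetry and the digital environment"
generated_text = "the dusial prespirals of conceptual poetry and the dusial entity"
print(find_neologisms(generated_text, source_text))
```

A filter like this only finds candidates; the hand-tuning for rhythm and cadence is still done by ear.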

E-lit Celebrity Shout-Out

Of course, as the machine begins to learn words (character by character assembling sense from matrices of data), familiar names (of theorists or poets cited in my book) crop up among the incoherence.

in Iteration 15 at diversity level 0.2:

of the material and and the strickless that in the specificient of the proposed in a strickles and subjective to the Strickland of the text to the proposing the proposed that seem of the stricklingers and seence and the poetry is the strickland to a text and the term the consider to the stricklinger
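The "diversity level" in these labels most likely corresponds to the sampling temperature used when picking each next character. That is an assumption based on the Keras example this code was adapted from. A minimal sketch of the idea, using only the standard library: the net's predicted probabilities are rescaled before one character is drawn. The names `rescale` and `sample_index` and the toy distribution `probs` are hypothetical, and the probabilities are assumed to be strictly positive.

```python
import math
import random


def rescale(probs, temperature):
    """Sharpen (temperature < 1) or flatten (temperature > 1) a distribution."""
    logits = [math.log(p) / temperature for p in probs]
    peak = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(value - peak) for value in logits]
    total = sum(weights)
    return [w / total for w in weights]


def sample_index(probs, temperature, rng=random):
    """Draw one index from the rescaled distribution."""
    return rng.choices(range(len(probs)), weights=rescale(probs, temperature), k=1)[0]


probs = [0.6, 0.3, 0.1]  # toy prediction over three possible next characters
for diversity in (0.2, 0.6, 1.0, 1.1):
    print(diversity, [round(p, 3) for p in rescale(probs, diversity)])
```

At a low setting such as 0.2, almost all of the probability goes to the single most likely character, which may be why the passage above keeps circling back to the same few words. At 1.0 the distribution is used as predicted, and above 1.0 unlikely characters get a better chance.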

in Iteration 26 at diversity level 1.0:

sound divancted image of Funkhouser surfaleders to dexhmeating antestomical prosting

in Iteration 34 at diversity level 0.6:

Charles Bernstein

Fen and Crotic

unternative segmentI

spate, papsict feent

& in Iteration 29 at diversity level 1.1:

Extepter Flores of the Amorphonate

evocative of a Genet-made literary saint among the rubble of some machinic merz, Leo has adopted temporarily his cybernetic moniker: